WWDC 2017 Initial Impressions

Check out a developer’s initial impressions of the new announcements at WWDC 2017 so far! By Andy Obusek.

Contents

- Xcode 9

- New Editor

- Refactoring

- Swift 4

- Xcode ❤️s GitHub

- Wireless Debugging

- Simulator Enhancements

- Testing

- Speed

- iOS 11

- Drag and Drop

- ARKit

- Machine Learning

- Vision

- HEVC and HEIF

- MusicKit

- AirPlay 2

- iOS Rapid Fire

- Hardware

- HomePod

- Refreshed Desktops

- iMac Pro

- New iPad Pro

- What Are People Excited About?

- Where To Go From Here?

ARKit

WWDC 2017 introduced ARKit, a framework that provides APIs for integrating augmented reality into your apps. On iOS, augmented reality mashes up a live view from the camera with objects you programmatically place in the view. This gives your app’s users the experience that their content is part of the real world.

Augmented reality programming without some sort of help can be very difficult. As a developer, you need to figure out where your “virtual” objects should be placed in the “reality” view, how the objects should behave, and how they should appear. This is where ARKit comes to the rescue and simplifies things for you, the developer.

ARKit can detect “planes” in the live camera view. These are essentially flat surfaces where you can programmatically place objects so they appear to be sitting in the real world. ARKit also uses input from the device’s sensors to keep these items in the correct place. Apple’s article on Understanding Augmented Reality is a good place to start for learning more.
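To make the plane detection idea a bit more concrete, here is a minimal sketch of a view controller that asks ARKit for horizontal planes and drops a small box on each one it finds. The class name and the box size are made up for illustration, and the configuration class uses the name from the shipping iOS 11 SDK (the first beta used a slightly different name), so treat this as a starting point rather than Apple's recommended setup.

```swift
import UIKit
import ARKit
import SceneKit

// Hypothetical view controller name, used only for this sketch.
final class PlaneDetectionViewController: UIViewController, ARSCNViewDelegate {
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        sceneView.delegate = self
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking needs a supported device; bail out quietly otherwise.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        sceneView.session.pause()
    }

    // Called when ARKit adds a node for a new anchor, including detected planes.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let planeAnchor = anchor as? ARPlaneAnchor else { return }

        // A 10 cm box (arbitrary size), raised by half its height so it rests on the plane.
        let box = SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0)
        let boxNode = SCNNode(geometry: box)
        boxNode.position = SCNVector3(planeAnchor.center.x, 0.05, planeAnchor.center.z)
        node.addChildNode(boxNode)
    }
}
```

Because the box is added as a child of the node ARKit manages for the plane anchor, it moves with the anchor as ARKit refines its estimate of the surface. Running this also requires a camera usage description in the app's Info.plist.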

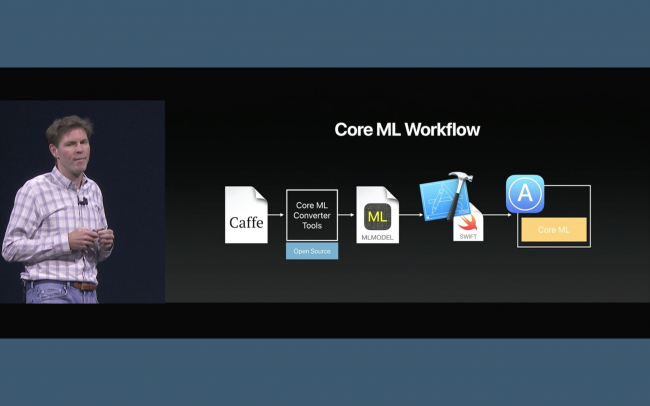

Machine Learning

Machine learning is an incredibly complicated topic. Luckily, the Core ML framework was released today to help make this advanced programming technique available to a wider audience. Core ML provides an API where developers can provide a trained model, some input data, and then receive predictions about the input data based on the trained model.

Right from their documentation on Core ML, Apple provides a good example use case for Core ML: predicting real estate prices. There’s a vast amount of historical data on real estate sales. This data is what the “model” is trained on. Once the trained model is in the proper format, Core ML can use it to predict the price of a piece of real estate based on data about the house, like the number of bedrooms (assuming the training data contains both the ultimate sale price and the number of bedrooms for each piece of real estate). Apple even supports trained models created with certain supported third-party packages.
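As a rough sketch of what that prediction step might look like in code, the function below feeds a single input into an already loaded model and reads back a single output. The feature names `bedrooms` and `price` are assumptions made for this example; a real model defines its own input and output names, and Xcode also generates a typed wrapper class for any model you add to a project, which is usually nicer to work with.

```swift
import CoreML

// Hypothetical feature names; a real model declares its own inputs and outputs.
let bedroomsFeatureName = "bedrooms"
let priceFeatureName = "price"

/// Asks the model for a price prediction based on the number of bedrooms.
/// Returns nil if the model's output has no feature with the expected name.
func predictedPrice(forBedrooms bedrooms: Double, using model: MLModel) throws -> Double? {
    // Wrap the input in a feature provider keyed by the model's input name.
    let input = try MLDictionaryFeatureProvider(dictionary: [
        bedroomsFeatureName: MLFeatureValue(double: bedrooms)
    ])

    // Run the model and pull the predicted value out of the result.
    let output = try model.prediction(from: input)
    return output.featureValue(for: priceFeatureName)?.doubleValue
}
```

You could get the `MLModel` instance from `MLModel(contentsOf:)` with the URL of a compiled model, or from the `model` property of the class Xcode generates for you.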

Vision

The iOS 11 SDK introduces the Vision framework, which provides a way for you to do high-performance image analysis inside your applications.

While face detection has already been available in the Core Image framework, the Vision framework goes further by providing the ability to detect not only faces in images, but also barcodes, text, the horizon, rectangular objects, and previously identified arbitrary objects. The Vision framework can also integrate with a Core ML model. This allows you to create entirely new detection capabilities with the Vision framework.
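Here is a minimal sketch of the built-in detection side of Vision, using face detection as the example. The function name is made up for illustration, and it runs synchronously for simplicity, so in a real app you would likely call it off the main thread.

```swift
import Vision
import CoreGraphics

/// Runs Vision's face rectangle detection on an image and returns the observations.
/// Each observation's boundingBox is in normalized coordinates (0 to 1).
func detectFaces(in image: CGImage) throws -> [VNFaceObservation] {
    let request = VNDetectFaceRectanglesRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])

    // perform(_:) blocks until the request has finished.
    try handler.perform([request])

    return request.results as? [VNFaceObservation] ?? []
}
```

The other detectors follow the same pattern: swap in a different request type, such as `VNDetectBarcodesRequest` or `VNDetectRectanglesRequest`, and cast the results to the matching observation type.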

For example, you could integrate a Core ML trained machine learning model to identify dogs drinking water in pictures. You just need to obtain the model first, which helps the Vision framework understand how to look for a dog drinking water. Take a look at the Vision framework documentation for more information.
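A sketch of how Vision and Core ML might be wired together is shown below. It assumes you already have an image classification model loaded as an `MLModel`; the function name is hypothetical, and the dog-drinking-water model itself is something you would have to train or obtain separately.

```swift
import Vision
import CoreML
import CoreGraphics

/// Classifies an image with a Core ML model by way of the Vision framework.
/// Assumes `mlModel` is an image classifier; other model types produce
/// different observation types, in which case this returns an empty array.
func classify(_ image: CGImage, with mlModel: MLModel) throws -> [VNClassificationObservation] {
    // Wrap the Core ML model so Vision can drive it.
    let visionModel = try VNCoreMLModel(for: mlModel)
    let request = VNCoreMLRequest(model: visionModel)

    // Let Vision crop and scale the image to the input size the model expects.
    request.imageCropAndScaleOption = .centerCrop

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    return request.results as? [VNClassificationObservation] ?? []
}
```

Each returned observation carries an `identifier` (the label) and a `confidence` value, so the caller can decide how sure the model needs to be before acting on a result.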

raywenderlich.com team members at WWDC 2017

HEVC and HEIF

Support for two new media formats was introduced with iOS 11: High Efficiency Video Coding (HEVC) and High Efficiency Image File Format (HEIF). These are contemporary formats that offer improved compression, while also recognizing that today’s image and video assets are more complicated than they were in the past. Technologies like Live Photos and burst photos require more information to be kept for a given image.

For example, a Live Photo may designate a “key frame” to be the thumbnail to represent the sequence of images. These new formats provide a more convenient, and smaller, way to store and represent this data. The following frameworks have been updated to support these new formats: VideoToolbox, Photos, and Core Image.

MusicKit

I’m a huge fan of music, and I’m sad to say that I traded in my Apple Music subscription for a Spotify subscription about two months ago. The changes coming with the new MusicKit framework could single-handedly bring me back as a paying Apple Music customer. One of my biggest frustrations as an Apple Music customer was the lack of access to the streamable music from within third-party applications.

There isn’t much documentation on MusicKit yet, but Apple announced that there will now be programmatic access to anything in Apple Music: not just songs the user owns, but all the streamable music.

AirPlay 2

And related to music, AirPlay 2 is also new with iOS 11. Its most notable feature is the added ability to stream audio across multiple devices. Think Sonos, but with your AirPlay 2 supported devices!

In order for your music or podcast playing app to take advantage of this, you’ll need to call setCategory(_:mode:routeSharingPolicy:options:) on AVAudioSession with the AVAudioSessionRouteSharingPolicyLongForm parameter value.
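That call might look something like the sketch below, written against the iOS 11 SDK with Swift 4. The function name is made up for illustration, and the playback category and default mode are assumptions about what a typical music or podcast app would want.

```swift
import AVFoundation

/// Configures the shared audio session for long-form playback (music, podcasts).
/// Spelling follows the iOS 11 SDK / Swift 4; later SDKs rename some of these symbols.
func configureLongFormAudioSession() throws {
    let session = AVAudioSession.sharedInstance()

    try session.setCategory(AVAudioSessionCategoryPlayback,
                            mode: AVAudioSessionModeDefault,
                            routeSharingPolicy: .longForm,
                            options: [])

    // Activate the session once it's configured.
    try session.setActive(true)
}
```

If you're building with a newer SDK, expect Xcode to suggest updated names for the category, mode, and argument labels.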

iOS Rapid Fire

There were a bunch of other miscellaneous updates that caught my eye as well:

- App Store Redesign – Coming with iOS 11 is a redesigned App Store app. It looks a lot like Apple Music, and has separate tabs for Apps and Games.

- Promote in-app purchases on the App Store – Got a new in-app purchase that you would like users to be aware of? You can now promote in-app purchases on the App Store, and in-app purchases can also be featured on the App Store by Apple!

- Live Photo adjustments – You can now edit Live Photos to do things like loop the Live Photo, or blur the moving parts.

- New SiriKit Domains and Intents – Enhancements to SiriKit now make it possible to add notes, interact with to-do lists and reminders, cancel rides, and transfer money.

- Annotate Screenshots on iPad – When taking a screenshot on your iPad, you’ll be able to quickly access the screenshot via a thumbnail in the corner of the screen, and then annotate it with markup.

- Phased App Releases – Now you can slowly release your app over a period of time. If you’re worried about a new app update putting a heavy load on your server-side backend, this can be a way to mitigate that risk.

Hardware

Another question we love to ask ourselves each WWDC is “what new hardware will we buy?” :]