Augmented Reality iOS Tutorial: Marker Tracking

Learn how to use marker tracking to display a 3D image at a given point in this augmented reality iOS tutorial! By Jean-Pierre Distler.

Make augmented reality, a reality!

It’s time to use String to bring something to your display. Open ViewController.m and add the following import right after the line #import "ViewController.h":

#import "StringOGL.h"

This imports the String SDK. Now add the following instance variables and property to the @interface part:

- int _numMarkers

- struct MarkerInfoMatrixBased _markerInfoArray[1]

- GLKMatrix4 _projectionMatrix

- @property (nonatomic, strong) StringOGL *stringOGL

Your interface should now look like this:

@interface ViewController () {

GLKMatrix4 _modelViewProjectionMatrix;

GLKMatrix3 _normalMatrix;

float _rotation;

GLuint _vertexArray;

GLuint _vertexBuffer;

//1

int _numMarkers;

struct MarkerInfoMatrixBased _markerInfoArray[1];

GLKMatrix4 _projectionMatrix;

}

@property (strong, nonatomic) EAGLContext *context;

@property (strong, nonatomic) GLKBaseEffect *effect;

//2

@property (nonatomic, strong) StringOGL *stringOGL;

- (void)setupGL;

- (void)tearDownGL;

@end

Let’s see what these variables are for.

- _numMarkers stores the number of detected markers. With the demo version of String this can only be 0 or 1.

- _markerInfoArray[1] stores information about the detected markers, like their position and rotation on the screen. Again you use a size of 1, because this version of String can only track one marker. Normally the size would be the count of all markers you want to track.

- _projectionMatrix is used for drawing.

- stringOGL holds a reference to String for later use.

Next, find viewDidLoad and add the following lines at the end:

self.stringOGL = [[StringOGL alloc] initWithLeftHanded:NO];

[self.stringOGL setNearPlane:0.1f farPlane:100.0f];

[self.stringOGL loadMarkerImageFromMainBundle:@"Marker.png"];

Let’s have a look at what’s happening here:

- This creates String and sets the coordinate system to be right-handed. The parameter describes whether the coordinate system you’re using is left-handed. In this case you’re using a right-handed coordinate system, because that’s the OpenGL default, so NO is passed. For more information on this, see here. Note that String will automatically take care of reading input from the device’s camera, processing the frames (to look for markers and call your custom code to draw any 3D geometry you might want to add), and displaying the results.

- Next you set the near and far clipping planes. These bound the region of your OpenGL world in which objects are visible; any object outside this region is not drawn to the screen.

- Finally, you load the marker image. This is the image String looks for in the camera input.
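To make the clipping planes a little more concrete, here is a minimal sketch in plain C (which also compiles inside an Objective-C .m file). The helper is_within_clip_range is hypothetical and not part of the String SDK. It only checks the distance along the view direction and ignores the sides of the view volume, so treat it as an illustration of the idea rather than what String or OpenGL actually does internally.

```c
#include <stdbool.h>

/* Hypothetical helper, not part of the String SDK.
   Returns true when a point at the given distance in front of the camera
   lies between the near and far clipping planes (both inclusive). */
bool is_within_clip_range(float distance, float near_plane, float far_plane) {
    return distance >= near_plane && distance <= far_plane;
}
```

With the values used in viewDidLoad (near 0.1, far 100.0), an object one unit in front of the camera passes this check, while one that is 150 units away does not.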

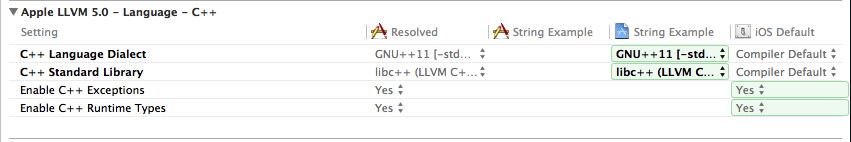

Build and run and you should get a lot of linker errors telling you about vtables and operators. The problem is that String is written in C++ and needs the C++ standard library to work. If you look in the Apple LLVM 5.0 – Language – C++ section of your build settings you can see that everything looks right. So what’s the problem?

Even though String uses C++ internally, your own project doesn’t use C++ yet, so the required C++ library is not linked into it. To get rid of these errors you need to use C++ in your project, but don’t panic: you don’t have to write a single line of C++ code. All you have to do is create a new file (Cmd+N) and select C and C++ (1), then C++ Class (2).

Save it as Dummy.cpp and build your project again. That looks much better, but there is still nothing to see from the camera; the app just shows the default output from the template.

Getting the camera output on the screen

The first step is to remove the following methods (where noted, remove only the method body and keep the method itself):

- update (only the content)

- glkView:drawInRect: (only the content)

- loadShaders

- compileShader:type:file:

- linkProgram:

- validateProgram:

Remove the line [self loadShaders]; from setupGL.

Find update and add the line [self.stringOGL process]; to it.

The last step is to add the line [self.stringOGL render]; to glkView:drawInRect:.

process starts the attempt to detect markers on the latest captured frame.

render renders the latest frame to the screen.

Build and run and you should see the frames captured by your camera.

Drawing a cube

In the last step you use the detected marker to draw a cube and rotate it depending on the marker’s position.

Add these lines at the end of update:

[self.stringOGL getProjectionMatrix:_projectionMatrix.m];

_numMarkers = [self.stringOGL getMarkerInfoMatrixBased:_markerInfoArray maxMarkerCount:1];

The first line gets the projection matrix that is used for rendering your scene. The next line gets the marker information from String. Let’s have a look at the parameters of this method.

- markerInfo is a C array that stores the information for each detected marker. As mentioned before, the array must be as large as the total number of markers you want to track.

- maxMarkerCount is the maximum number of marker entries you want to receive. Let’s say you want to track five markers in your app. If you pass a value of two, you will receive information for only two markers, even if four markers were detected.

The return value tells you how many MarkerInfo entries were written to your array. So if you want to track four markers and only two are detected in this frame, the method will return two.
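The relationship between the number of detected markers, maxMarkerCount and the return value can be sketched in a few lines of plain C (also valid in a .m file). The function markers_written is a hypothetical stand-in for the behaviour described above, not String's actual implementation: it simply assumes the method writes as many entries as were detected, capped at the maximum you asked for.

```c
/* Hypothetical model of the return value described above:
   the number of entries written is the number of detected markers,
   capped at the maximum count the caller asked for. */
int markers_written(int detected_count, int max_marker_count) {
    return detected_count < max_marker_count ? detected_count
                                             : max_marker_count;
}
```

Under this assumption, four detected markers with a maximum of two yields two entries, and two detected markers with a maximum of four also yields two.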

Now add this to glkView:drawInRect:

glBindVertexArrayOES(_vertexArray);

//1

_effect.transform.projectionMatrix = _projectionMatrix;

//2

for(int i = 0; i < _numMarkers; i++) {

//3

_effect.transform.modelviewMatrix = GLKMatrix4MakeWithArray(_markerInfoArray[i].transform);

float diffuse[4] = {0,0,0,1};

diffuse[_markerInfoArray[i].imageID % 3] = 1;

_effect.light0.diffuseColor = GLKVector4MakeWithArray(diffuse);

[_effect prepareToDraw];

glDrawArrays(GL_TRIANGLES, 0, 36);

}

Let’s have a look at the interesting parts of this method:

- This sets the projection matrix on your GLKBaseEffect.

- Here you start a for loop to iterate over all detected markers. You track only one marker, so the loop isn’t strictly necessary, but it shows you how to work with more markers.

- This line gets the transformation of the detected marker and applies it to your effect.
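The drawing code also picks the cube's diffuse colour from the marker's imageID: it starts with opaque black and sets one of the first three channels to 1, selected by imageID % 3. The sketch below isolates that step in plain C (also valid in a .m file). The Color struct and the diffuse_for_image_id function are hypothetical names used only for illustration, and the sketch assumes imageID is a non-negative integer.

```c
/* Hypothetical RGBA colour type, for illustration only. */
typedef struct { float r, g, b, a; } Color;

/* Mirrors the colour selection in the drawing code: start with opaque
   black, then set one channel to full intensity based on the image ID
   (remainder 0 -> red, 1 -> green, 2 -> blue).
   Assumes the image ID is non-negative. */
Color diffuse_for_image_id(unsigned image_id) {
    float channels[4] = {0.0f, 0.0f, 0.0f, 1.0f};
    channels[image_id % 3] = 1.0f;
    Color color = {channels[0], channels[1], channels[2], channels[3]};
    return color;
}
```

With a single marker whose image ID is 0, this gives a red cube; additional markers would cycle through green and blue.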

Build and run the project. You should see the cube only when the marker is on the screen, and the cube will rotate a little when you tilt or move your device. This way, you can have a look at the top, bottom and sides of the cube.

Note: Your cube may flash from time to time, depending on the screen on which you’re displaying your marker (retina vs. standard) and on the lighting of your surroundings. If you have trouble, try changing rooms or monitors.

Where To Go From Here?

Here is an example project with the code from this part of the augmented reality iOS tutorial series.

Note: Because of the beta status, we cannot provide you with the whole project. I removed libString.a, so to compile the project you have to add your own copy of the library.

At this point, you have a great start toward making your own augmented reality app. With a little OpenGL ES knowledge, you could extend this app to display a model of your choice instead of the 3D cube. You might also want to add in additional features like the model responding to your touches, animation, or sound.

Also, I want to mention another SDK for marker-based AR named Metaio that can also track 3D markers. Metaio is C++ based so you have to mix C++ with Objective-C (referred to as Objective-C++). The basic version is free, so be sure to check it out and see which is the best fit for you.

Want to learn more about augmented reality? Check out our location-based augmented reality iOS tutorial, where you’ll learn how to make an app that displays nearby points of interest on your video feed.

In the meantime, if you have any comments or questions, please join the forum discussion below!
