Image Processing in iOS Part 1: Raw Bitmap Modification

Learn the basics of image processing on iOS via raw bitmap modification, Core Graphics, Core Image, and GPUImage in this 2-part tutorial series. By Jack Wu.


Imagine you just took the best selfie of your life. It’s spectacular, it’s magnificent and it’s worthy of your upcoming feature in Wired. You’re going to get thousands of likes, up-votes, karma and re-tweets, because you’re absolutely fabulous. Now if only you could do something to this photo to shoot it through the stratosphere…

That’s what image processing is all about! With image processing, you can apply fancy effects to photos such as modifying colors, blending other images on top, and much more.

In this two-part tutorial series, you’re first going to get a basic understanding of image processing. Then, you’ll make a simple app that implements a “spooky image filter” and makes use of four popular image processing methods:

- Raw bitmap modification

- Using the Core Graphics Library

- Using the Core Image Library

- Using the 3rd-party GPUImage library

In this first segment of this image processing tutorial, you’ll focus on raw bitmap modification. Once you understand this basic process, you’ll be able to understand what happens with other frameworks. In the second part of the series, you’ll learn about three other methods to make your selfie, and other images, look remarkable.

This tutorial assumes you have basic knowledge of iOS and Objective-C, but you don’t need any previous image processing knowledge.

Getting Started

Before you start coding, it’s important to understand several concepts that relate to image processing. So, sit back, relax and soak up this brief and painless discussion about the inner workings of images.

First things first, meet your new friend who will join you through this tutorial… drumroll… Ghosty!

Boo!

Now, don’t be afraid, Ghosty isn’t a real ghost. In fact, he’s an image. When you break him down, he’s really just a bunch of ones and zeroes. That’s far less frightening than working with an undead subject.

What’s an Image?

An image is a collection of pixels, and each one is assigned a single, specific color. The pixels are usually stored in an array, which you can picture as a 2-dimensional grid of rows and columns.
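To make the “2-dimensional array” picture concrete, here is a minimal sketch in plain C (which also compiles inside an Objective-C file). It assumes the pixels sit in one flat buffer, row after row, with one 32-bit number per pixel. The helper names `pixel_index` and `pixel_at` are made up for illustration and aren’t part of any framework.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: position of the pixel at column x, row y inside a
   flat buffer that stores the image one row after another (assumption). */
static size_t pixel_index(size_t x, size_t y, size_t width) {
    return y * width + x;
}

/* Hypothetical helper: read one pixel from the flat buffer as if it were
   a 2-dimensional array. The caller must keep x and y inside the image. */
static uint32_t pixel_at(const uint32_t *pixels, size_t width,
                         size_t x, size_t y) {
    return pixels[pixel_index(x, y, width)];
}
```

For a 3-pixel-wide image, the pixel at column 2 of row 1 lives at index 1 * 3 + 2 = 5 in the buffer.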

Here is a much smaller version of Ghosty, enlarged:

The little “squares” in the image are pixels, and each one shows only one color. When hundreds and thousands of pixels come together, they create a digital image.

How are Colors Represented in Bytes?

There are numerous ways to represent a color. The method that you’re going to use in this tutorial is probably the easiest to grasp: 32-bit RGBA.

As the name implies, 32-bit RGBA stores a color as 32 bits, or 4 bytes. Each byte stores a component, or channel. The four channels are:

- R for red

- G for green

- B for blue

- A for alpha.

As you probably already know, red, green and blue are a set of primary colors for digital formats. You can create almost any color you want from mixing them the right way.

Since you’re using 8 bits for each channel, the total number of opaque colors you can create by using different RGB values in 32-bit RGBA is 256 * 256 * 256 = 16,777,216, or approximately 17 million colors. Whoa man, that’s a lot of color!
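If you’d like to see the four channels as bytes, the following C sketch packs them into a single 32-bit value and pulls them back out. The byte order is an assumption made for this example (red in the lowest byte, alpha in the highest); real bitmaps can use other orders. All function names here are hypothetical.

```c
#include <stdint.h>

/* Assumed layout for this sketch only: R in the lowest byte, then G,
   then B, with A in the highest byte. */
static uint32_t rgba_make(uint8_t r, uint8_t g, uint8_t b, uint8_t a) {
    return (uint32_t)r | ((uint32_t)g << 8) |
           ((uint32_t)b << 16) | ((uint32_t)a << 24);
}

/* Each getter shifts its channel down to the low byte and masks it. */
static uint8_t rgba_red(uint32_t c)   { return (uint8_t)(c & 0xFF); }
static uint8_t rgba_green(uint32_t c) { return (uint8_t)((c >> 8) & 0xFF); }
static uint8_t rgba_blue(uint32_t c)  { return (uint8_t)((c >> 16) & 0xFF); }
static uint8_t rgba_alpha(uint32_t c) { return (uint8_t)((c >> 24) & 0xFF); }

/* Number of distinct opaque colors: 256 choices for each of R, G and B. */
static uint32_t opaque_color_count(void) {
    return 256u * 256u * 256u;
}
```

With this layout, a fully opaque color with red 0, green 104 and blue 55 packs to 0xFF376800.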

The alpha channel is quite different from the others. You can think of it as transparency, just like the alpha property of UIView.

The alpha of a color doesn’t really mean anything unless there’s a color behind it; its main job is to tell the graphics processor how transparent the pixel is, and thus, how much of the color beneath it should show through.
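One common way to express “how much of the color beneath should show through” is a weighted average of the two colors, channel by channel. The C sketch below assumes that approach, with alpha running from 0 (fully transparent) to 255 (fully opaque). The function name `blend_channel` is hypothetical, and this is an illustration rather than what any particular graphics processor does.

```c
#include <stdint.h>

/* Blend one 8-bit channel of a top color over the color beneath it.
   alpha: 0 = top is fully transparent, 255 = top is fully opaque.
   Assumed formula: top * a + bottom * (1 - a), where a = alpha / 255. */
static uint8_t blend_channel(uint8_t top, uint8_t bottom, uint8_t alpha) {
    uint32_t mixed = (uint32_t)top * alpha +
                     (uint32_t)bottom * (255u - alpha);
    /* Adding 127 before dividing rounds to the nearest whole value. */
    return (uint8_t)((mixed + 127u) / 255u);
}
```

An alpha of 255 returns the top value unchanged, an alpha of 0 returns the value beneath, and anything in between gives a mix of the two.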

You’ll dive deeper into alpha when you work through the section on blending.

To conclude this section, an image is a collection of pixels, and each pixel is encoded to display a single color. For this lesson, you’ll work with 32-bit RGBA.

Note: Have you ever wondered where the term Bitmap originated? A bitmap is a 2D map of pixels, each one comprised of bits! It’s literally a map of bits. Ah-ha!

So, now you know the basics of representing colors in bytes. There are still three more concepts to cover before you dig in and start coding.

Color Spaces

The RGB method to represent colors is an example of a color space. It’s one of many methods for storing colors. Another color space is grayscale.

As the name implies, all images in the grayscale color space consist of shades of gray, from black to white, and you only need to save one value to describe each pixel’s color.
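To see how three values can collapse into one, here is a small C sketch that turns an RGB color into a single gray value. It assumes the simplest possible rule, a plain average of the three channels; other conversions weight the channels differently. The name `gray_from_rgb` is hypothetical.

```c
#include <stdint.h>

/* Collapse three 8-bit channels into one gray value.
   Assumption for this sketch: a plain, unweighted average. */
static uint8_t gray_from_rgb(uint8_t r, uint8_t g, uint8_t b) {
    /* Widen to unsigned int first so the sum can't overflow 8 bits. */
    return (uint8_t)(((unsigned int)r + g + b) / 3u);
}
```

Pure white (255, 255, 255) stays at 255, pure black stays at 0, and every other color lands somewhere in between.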

The downside of RGB is that it’s not very intuitive for humans to visualize.

For example, what color do you think an RGB of [0, 104, 55] produces?

Taking an educated guess, you might say a teal or skyblue-ish color, which is completely wrong. Turns out it’s the dark green you see on this website!

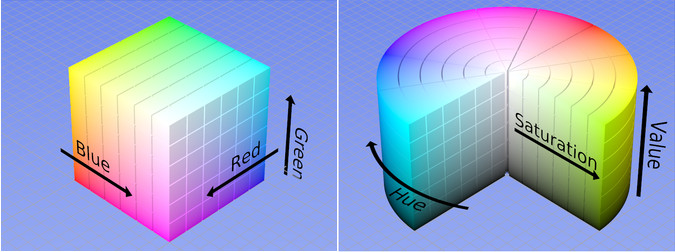

Two other popular color spaces are HSV and YUV.

HSV, which stands for Hue, Saturation and Value, is a much more intuitive way to describe colors. You can think of the parts this way:

- Hue as “Color”

- Saturation as “How full is this color”

- Value, as the “Brightness”

In this color space, if you find yourself looking at unknown HSV values, it’s much easier to imagine what the color looks like based on the values.
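If you’re curious how the same color looks in both color spaces, the C sketch below converts 8-bit RGB to HSV using the widely used max/min formulation. It assumes hue is measured in degrees from 0 to 360, with saturation and value from 0 to 1. The `HSV` struct and `rgb_to_hsv` function are names invented for this example.

```c
#include <stdint.h>

/* Hypothetical result type for this sketch. */
typedef struct {
    double h; /* hue in degrees, 0 up to 360 */
    double s; /* saturation, 0 to 1 */
    double v; /* value (brightness), 0 to 1 */
} HSV;

static HSV rgb_to_hsv(uint8_t r8, uint8_t g8, uint8_t b8) {
    double r = r8 / 255.0, g = g8 / 255.0, b = b8 / 255.0;
    double max = r > g ? (r > b ? r : b) : (g > b ? g : b);
    double min = r < g ? (r < b ? r : b) : (g < b ? g : b);
    double delta = max - min;

    HSV out = {0.0, 0.0, max};           /* value is the largest channel */
    if (max > 0.0) out.s = delta / max;  /* black keeps saturation 0 */

    if (delta > 0.0) {                   /* grays keep hue 0 */
        if (max == r)      out.h = 60.0 * ((g - b) / delta);
        else if (max == g) out.h = 60.0 * ((b - r) / delta + 2.0);
        else               out.h = 60.0 * ((r - g) / delta + 4.0);
        if (out.h < 0.0) out.h += 360.0; /* wrap negative hues around */
    }
    return out;
}
```

Running the RGB value [0, 104, 55] through this gives a hue of roughly 152 degrees (in the green range), full saturation, and a value of about 0.41, which reads as “a fairly dark, pure green” much more readily than the three RGB numbers do.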

The differences between RGB and HSV are pretty easy to understand, at least once you look at this image:

Image modified from work by SharkD under the Creative Commons Attribution-Share Alike 3.0 Unported license

YUV is another popular color space, because it’s what TVs use.

Television signals came into the world with one channel, grayscale. Later, two more channels “came into the picture” when color television emerged. Since you’re not going to tinker with YUV in this tutorial, you might want to do some more research on YUV and other color spaces to round out your knowledge. :]

Note: For the same color space, you can still have different representations for colors. One example is 16-bit RGB, which optimizes memory use by using 5 bits for R, 6 bits for G, and 5 bits for B.

Why 6 for green, and 5 for red and blue? This is an interesting question and the answer comes from your eyeball. Human eyes are most sensitive to green and so an extra bit enables us to move more finely between different shades of green.
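Here’s a rough C sketch of how an 8-bit-per-channel color could be squeezed into 16 bits using the 5-6-5 split described in the note. The bit order (red in the top bits, blue in the bottom) is an assumption for this example, and the function names are hypothetical.

```c
#include <stdint.h>

/* Pack 8-bit channels into 16 bits: 5 for red, 6 for green, 5 for blue.
   Assumed bit order for this sketch: RRRRRGGG GGGBBBBB.
   The low bits of each channel are simply dropped. */
static uint16_t rgb565_pack(uint8_t r, uint8_t g, uint8_t b) {
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

/* Green keeps 6 bits, so it has 64 levels instead of the 32 levels
   that red and blue get. */
static uint8_t rgb565_green(uint16_t c) {
    return (uint8_t)((c >> 5) & 0x3F);
}
```

Notice that green is shifted right by only 2 bits while red and blue lose 3, which is exactly the extra bit of precision the note talks about.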