Building an Action for Google Assistant: Getting Started

In this tutorial, you’ll learn how to create a conversational experience with Google Assistant. By Jenn Bailey.

Contents

Building an Action for Google Assistant: Getting Started

30 mins

- VUI and Conversational Design

- Designing an Action’s Conversation

- Getting Started

- Checking Permissions Settings

- Creating the Action Project

- Creating the Dialogflow Agent

- Agents and Intents

- Running your Action

- Modifying the Welcome Intent

- Testing Actions on a Device

- Uploading the Dialogflow Agent

- Fulfillment

- Setting up the Local Development Environment

- Installing and Configuring Google Cloud SDK

- Configuring the Project

- Opening the Project and Deploying to the App Engine

- Implementing the Webhook

- Viewing the Stackdriver Logs

- Creating and Fulfilling a Custom Intent

- Creating the Intent

- Fulfilling the Intent

- Detecting Surfaces

- Handling a Confirmation Event

- Using an Option List

- Entities and Parameters

- Entities

- Action and Parameters

- Using an Entity and a Parameter

- Where to Go From Here?

Entities and Parameters

A Training Phrase often contains useful data such as words or phrases that specify things like quantity or date. You use Parameters to represent this information. Parameters in training phrases are represented by an Entity. Entities identify and extract useful data from natural language inputs in Dialogflow.

Entities

Entities extract information such as date, time, color, ordinal number and unit.

The Entity Type defines the type of extracted data. Each parameter will have a corresponding Entity.

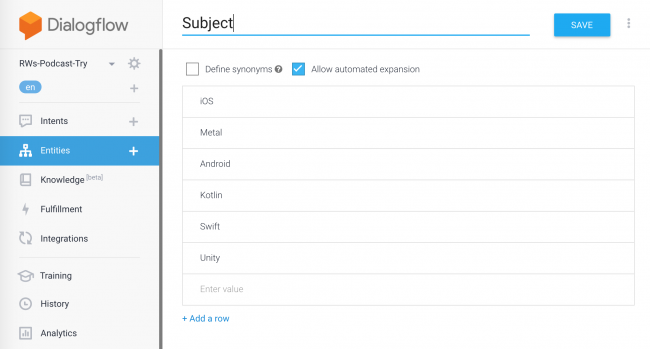

For each type of entity, there can be many Entity Entries. Entries are a set of equivalent words and phrases. Sample entity entries for the subject of a technical podcast might be iOS, iPhone, and iPad.

System Entities are built into Dialogflow. Some system entities include date, time, airports, music artists and colors.

For a generic entity type, use @sys.any, which matches any type of information. If the system entities are not specific enough, define your own Developer Entities.

Below is an example of a developer entity defined to represent the subject of a technical podcast.

You can see the @Subject Entity created when you uploaded the sample project by selecting Entities in the left pane.
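To make the relationship between an entity type and its entries concrete, here is a minimal Kotlin sketch. It is only a mental model, not part of Dialogflow or any SDK: `EntityEntry`, `EntityType`, `resolve` and the sample synonyms are hypothetical names and values, and the real @Subject entity in the sample project may contain different entries.

```kotlin
// Hypothetical model of a Dialogflow-style entity; not part of any Dialogflow SDK.
data class EntityEntry(val value: String, val synonyms: Set<String>)

data class EntityType(val name: String, val entries: List<EntityEntry>) {
    // Returns the reference value of the first entry that lists the word
    // as a synonym (ignoring case), or null when no entry matches.
    fun resolve(word: String): String? =
        entries.firstOrNull { entry ->
            entry.synonyms.any { it.equals(word, ignoreCase = true) }
        }?.value
}

// Illustrative entries only; the sample project's @Subject entity may differ.
val subjectEntity = EntityType(
    name = "Subject",
    entries = listOf(
        EntityEntry("iOS", setOf("iOS", "iPhone", "iPad")),
        EntityEntry("Android", setOf("Android", "Android Studio"))
    )
)
```

With these illustrative entries, `subjectEntity.resolve("ipad")` returns `"iOS"`, while a word that no entry lists returns `null`.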

Action and Parameters

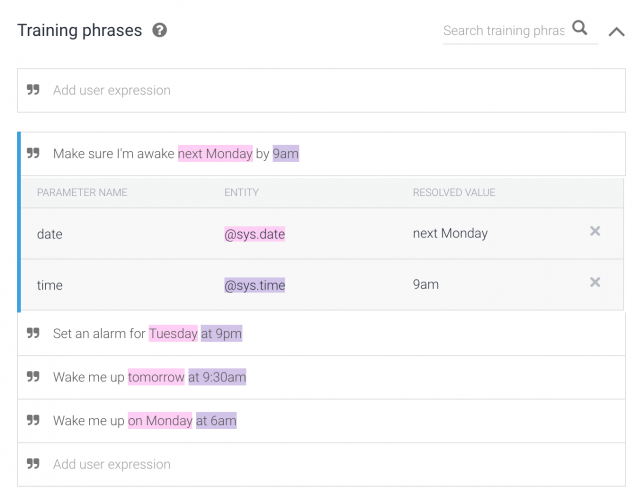

When Dialogflow recognizes part of a training phrase as an entity, it highlights that part and designates it a parameter. The parameter then appears in a table below the training phrases.

Dialogflow automatically identifies and tags some more common types of system entities as parameters.

In the example below, Dialogflow identifies the time and date parameters and assigns them the correct type.

You can see an example using podcast subjects by going to Intents ▸ play_an_episode_about.

In Dialogflow, Action and Parameters are below the training phrases of an intent.

Each parameter has several attributes:

- A Required checkbox determines whether the parameter is necessary.

- The Parameter Name identifies the parameter.

- The Entity is the type of the parameter.

- The Value, such as $subject, is how you refer to the parameter in responses.
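The attributes above can be pictured as one row of data per parameter. The sketch below is a hypothetical Kotlin mirror of that table, not a Dialogflow API: `IntentParameter` and `missingRequired` are made-up names, and the function only illustrates what marking a parameter as required implies for a handler that receives the extracted values.

```kotlin
// Hypothetical mirror of one row in the Action and Parameters table.
data class IntentParameter(
    val required: Boolean, // state of the Required checkbox
    val name: String,      // Parameter Name, for example "subject"
    val entity: String,    // Entity, for example "@Subject"
    val value: String      // Value used in responses, for example "$subject"
)

// Lists the names of required parameters that have no usable extracted value.
fun missingRequired(
    parameters: List<IntentParameter>,
    extracted: Map<String, String>
): List<String> =
    parameters
        .filter { it.required && extracted[it.name].isNullOrBlank() }
        .map { it.name }
```

An empty result means every required parameter was filled, so the handler can safely read the values it needs.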

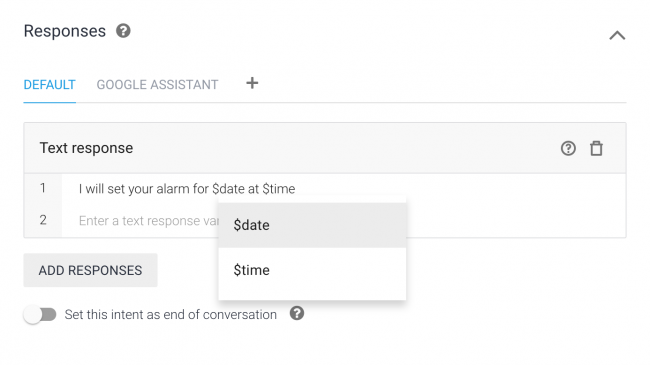

Parameters can appear as part of a text response:
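As a rough illustration of how a reference such as $subject ends up in the spoken text, the sketch below substitutes parameter values into a response template. Dialogflow performs this substitution itself for responses defined in the console, so `fillResponse` is a hypothetical helper written only to show the idea.

```kotlin
// Hypothetical helper; Dialogflow does this itself for responses defined in the console.
fun fillResponse(template: String, parameters: Map<String, String>): String =
    Regex("""\$(\w+)""").replace(template) { match ->
        // Leave unknown references untouched so a missing parameter is easy to spot.
        parameters[match.groupValues[1]] ?: match.value
    }
```

For example, calling `fillResponse` with the template `Playing an episode about $subject.` and a map containing `subject` set to `Android` produces `Playing an episode about Android.`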

Using an Entity and a Parameter

Time to try using the Entities and Parameters you learned about! In Dialogflow, make sure the webhook on the play_an_episode_about intent is enabled. Then add the following code to the intent handler in RWPodcastApp.kt:

```kotlin
// Read the subject the user asked for from the matched intent's parameters.
val platform = request.getParameter(subject) as String
val episodes = jsonRSS.getJSONObject(rss).getJSONObject(channel)
    .getJSONArray(item)

// Find the first episode whose title contains the subject, ignoring case.
var episode: JSONObject? = null
for (i in 0 until episodes.length()) {
  val currentEpisode = episodes.getJSONObject(i)
  if (currentEpisode.optString(title).contains(platform, ignoreCase = true)) {
    episode = currentEpisode
    break
  }
}

if (episode != null) {
  // Wrap the episode's audio in a media response so the Assistant can play it.
  val mediaObjects = ArrayList<MediaObject>()
  mediaObjects.add(
      MediaObject()
          .setName(episode.getString(title))
          .setDescription(getSummary(episode))
          .setContentUrl(episode.getJSONObject(enclosure).getString(audioUrl))
          .setIcon(
              Image()
                  .setUrl(logoUrl)
                  .setAccessibilityText(requestBundle.getString("media_image_alt_text"))))
  responseBuilder
      .add(episode.getString(title))
      .addSuggestions(suggestions)
      .add(MediaResponse().setMediaObjects(mediaObjects).setMediaType("AUDIO"))
} else {
  // No title matched, so tell the user and keep the suggestion chips available.
  responseBuilder.add("There are no episodes about $platform")
      .addSuggestions(suggestions)
}
```

The code above searches the feed for the first episode whose title contains the requested subject. Here’s how:

- The code looks for the value of the Subject parameter.

- The user utterance provides the parameter.

- The Entity defined in Dialogflow determines which words in the utterance are recognized as that value.

The first podcast containing the Subject keyword will be passed into the MediaObject and played.
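If you want to experiment with the matching rule outside the webhook, the same first-match search can be sketched on plain Kotlin data. This is a simplified stand-in, not the tutorial's handler: `Episode` and `findEpisodeAbout` are hypothetical names, and the real code reads titles from the RSS feed's JSON instead of a list.

```kotlin
// Hypothetical stand-in for one feed item; the tutorial reads these from a JSONObject.
data class Episode(val title: String, val audioUrl: String)

// Returns the first episode whose title contains the subject, ignoring case,
// or null when no title matches.
fun findEpisodeAbout(subject: String, episodes: List<Episode>): Episode? =
    episodes.firstOrNull { it.title.contains(subject, ignoreCase = true) }
```

Because the search stops at the first match, the order of the feed decides which episode plays when several titles mention the same subject.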

Where to Go From Here?

Wow, that was a lot of work! You’re awesome!

In this tutorial, you created a Google Action in the Google Actions Console and utilized Dialogflow to design a conversation for that action. You provided fulfillment for the action by implementing a webhook.

Get the final project by clicking the Download Materials button at the top or bottom of this tutorial. If you want to keep moving forward with this, here are some suggestions:

- You can learn more about publishing your action here.

- Within 24 hours of publishing your action, the Analytics section of the Actions Console will display the collected data. Use the Analytics section to perform a health check and get information about the usage, health and discovery of the action. Learn more here.

- Want to go even deeper into Google Assistant? Google has an extensive Conversation Design Guide. Find Google Codelabs about the Assistant here and a few Google Samples here. Google also provides complete documentation.

If you have any questions or comments, please join the forum discussion below!